Reports are piling up of Tesla cars braking unexpectedly while driving. The problem is known as "phantom braking": the Advanced Driver Assistance System (ADAS) hits the brakes for no good reason.

As the complaints come in, the US government is investigating them through the National Highway Traffic Safety Administration (NHTSA).

The Problem

When the self-driving system brakes for no reason while the car is moving, it could lead to a serious crash. This is especially dangerous on the open road when another car is following too closely behind you.

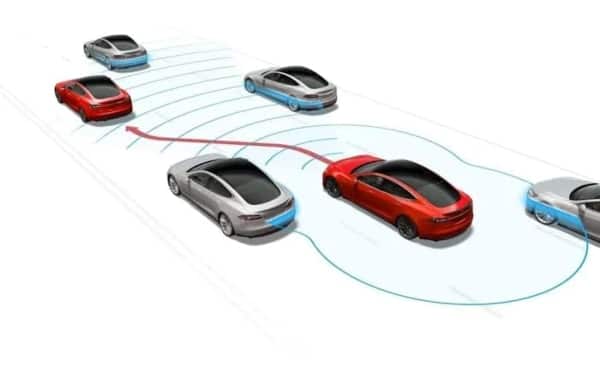

Autopilot is designed to brake when it detects an obstacle in front of the car, in order to avoid a collision. That is all good, except that the system appears to falsely detect objects and hit the brakes when there is nothing in sight. Hence the name "phantom braking".

This happens when the car is driving in Autopilot mode. Most Tesla owners have said the problem was manageable for the most part, since such events used to be few and far between.

Lately, however, these events have become more and more frequent.

The Complaints

The NHTSA has received 354 complaints over the past nine months. Its investigation covers approximately 416,000 Tesla Model 3 and Model Y vehicles from model years 2021–2022.

But that's not all. Tesla is being investigated over two other matters. In December of last year, NHTSA opened an investigation covering 580,000 vehicles over the Passenger Play feature, which allowed games to be played on the touchscreen while the car was moving. Tesla then disabled the feature.

In August 2021, the agency opened another investigation, covering 765,000 Tesla vehicles, into the role of Autopilot in 11 crashes.

The Repercussions

Just last month, Tesla pulled a new version of its Full Self-Driving (FSD) Beta software after many testers reported the phantom braking issue. Elon Musk himself acknowledged the problem.

It is becoming clear that phantom braking is a significant problem for Autopilot users.

One NHTSA complaint reads:

“Upon accepting delivery at the end of May we have accumulated 9,000 miles on the car and have a had horrible experiences with the traffic aware cruise control slamming on the brakes for no apparent reason with nothing ahead or passing cars. Behavior can be 5-10 mph slowdowns or in some cases FULL brake pressure which puts us in danger of being rear ended. Multiple times we have been close to rear-ended.”

Many Tesla owners reached out to the company about the issue, only to be told that it was due to "software evolving."

“While driving using cruise control the vehicle will occasionally brake suddenly for unknown reasons. In one instance I was worried that the car following me would either hit my car or be forced to take other action possibly causing an accident. When I contacted Tesla regarding my concern they said something about the software program evolving…no fix available.”

The "software evolving" part of this response is interesting, because the complaints coincide with Tesla's transition to its vision-based Autopilot, for which the company dropped the use of radar.

Whatever the cause, the user complaints are strikingly similar: drivers are all experiencing phantom braking more and more often.

In one complaint from 11 February 2022, the driver says:

“Heavy braking occurs for no apparent reason and with no warning, resulting in several near misses for rear end collisions… this issue has occurred dozens of times during my five months and 10,000-mile ownership.”

In another, dated 3 February 2022, the owner reports that their car suddenly decelerated from 73 mph to 59 mph in two seconds.
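For a rough sense of scale, the reported drop can be converted into an average deceleration. This is a back-of-the-envelope estimate that assumes the car slowed at a constant rate over the full two seconds; the complaint does not describe the actual braking profile, so the peak deceleration could have been higher.

$$
\bar{a} = \frac{\Delta v}{\Delta t} = \frac{(73 - 59)\ \text{mph}}{2\ \text{s}} = 7\ \text{mph/s} \approx \frac{14 \times 0.447\ \text{m/s}}{2\ \text{s}} \approx 3.1\ \text{m/s}^2 \approx 0.32\,g
$$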

So far, no crashes, injuries, or fatalities have been attributed to these incidents. Still, the problem with Autopilot must be fixed as soon as possible. The Autopilot hardware was first introduced in Tesla vehicles in September 2014, and the system has evolved since then as the company released increasingly advanced features through over-the-air software updates.

The system includes features such as Autosteer, Autopark, and Traffic-Aware Cruise Control (TACC). It is quite advanced, and some drivers rely on it heavily.



The Finance

Tesla's stock (TSLA) dropped by more than 3% following the report published on February 2. Although Tesla did not comment on the issue, Elon Musk has acknowledged the problem.

Earlier this month, Tesla revealed that it had received an SEC subpoena in November 2021, shortly after Musk conducted a Twitter poll asking whether he should sell 10% of his shares.

Tesla shares were down over 4% on Thursday following the news of the latest federal safety probe.

Musk does not always seem to take these matters seriously. After a recent recall of a feature called "Boombox," for example, he described NHTSA as the "fun police."

The fun police made us do it (sigh)

— Elon Musk (@elonmusk) February 13, 2022

The so-called "Boombox" feature let Tesla owners blast silly sounds out of their cars while driving. When it was in use, pedestrians might not be able to hear the legally mandated pedestrian warning sound, so the company had to recall the feature.

Conclusion

Phantom braking is a major problem for Tesla. It has prompted another NHTSA investigation, and Tesla's stock fell on the news. Owners are filing complaints about their cars braking in the middle of the road, which may be linked to Tesla's decision to drop radar in favor of its vision-based Autopilot.

Although no accidents have been reported as a consequence of this bug, Tesla must respond promptly and update its Autopilot software.

Tesla has issued 10 voluntary recalls in the U.S. over the past four months, and the vision-based Autopilot might be next unless the company finds a way to fix it.
